- daniel@udk-berlin.de

- 36 years old, Slovak by origin, European by choice

- Bc. in Humanities (Charles University in Prague, CZ) & Bc. in Linguistics (Université de Nice Sophia-Antipolis, FR)

- certified to use Feuerstein's cognitive Instrumental Enrichment 1 and 2 methods

- Master in Complex Systems: Natural and Artificial Cognition (École Pratique des Hautes Études, Paris, FR)

- Double PhD :: Cybernetics (Slovak University of Technology, SK) and Psychology (Université Paris 8), defended with the thesis "Evolutionary models of ontogeny of linguistic categories" (cf. http://wizzion.com/PP.pdf)

- wizzion.com UG (haftungsbeschränkt), now in hibernation

- UdK Medienhaus IT admin between December 2014 and July 2018

- ECDF / UdK Digital Education W1 professorship since 1.18.2018

- married, 2 daughters

- developmental psycholinguistics

- child - computer interaction

- constructivist theories of learning and intelligence

- cognitive enrichment and cognitive impoverishment


- machine learning & machine teaching ("Can machines teach?")

- machine morality & roboethics

- natural language processing & computational rhetorics

- history of ideas and technologies

- digital diversity, open source (e.g. Linux) and maker movement

- conservative techno-optimist

- Definition of Digital Education ("education-of" or "education-with"?)

- Evaluation criteria for e-didactic tools, media and methods

- Parent participation in digital education

Two topics of PRAXIS:

- Evaluation and extension of "Computer Science Unplugged" curricula

- fibel.digital

The frontend runs in Chromium, which loads index.html, which in turn loads everything else.

Backend services and sensors interact with the frontend via WebSockets. Every backend service writes its output into a named pipe located in /dev/fibel. These pipes are created by a set of gwsocket daemons launched at startup; the configuration file for the service-to-port (service2port) associations is /etc/fibel/sockets.

All frontend code for handling the sockets is in js/sockets.js.
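Under this design a backend service only has to write lines of text into its pipe; the gwsocket daemon reading that pipe (in gwsocket's terms, the `--pipein` FIFO) passes them on to the WebSocket clients connected to its port. A minimal Python sketch of the writing side, assuming one FIFO per service; the pipe name `/dev/fibel/gesture`, the function name and the JSON message shape are hypothetical, not taken from the fibel code:

```python
import json
import os


def publish(pipe_path: str, event: str, data: dict) -> str:
    """Write one newline-terminated JSON message into a service's pipe.

    Assumes the FIFO already exists (gwsocket creates it at startup).
    If the path is missing, open() would create an ordinary file instead,
    so callers should check first. Opening a FIFO for writing blocks
    until a reader (gwsocket) has it open.
    """
    message = json.dumps({"event": event, "data": data}, separators=(",", ":"))
    with open(pipe_path, "w", encoding="utf-8") as pipe:
        pipe.write(message + "\n")  # one message per line
    return message


if __name__ == "__main__":
    # Hypothetical pipe name for a gesture-sensor service.
    path = "/dev/fibel/gesture"
    if os.path.exists(path):
        publish(path, "flick", {"start": "south", "finish": "north"})
```

One message per line keeps the frontend side simple: each WebSocket message handled in js/sockets.js would then carry exactly one JSON object.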

- fibel.digital

- bildung.digital.udk-berlin.de

- Neal Stephenson - The Diamond Age: Or, A Young Lady's Illustrated Primer

- 17.1. Design (Entwurf)

- 24.1. Imprint

- 31.1. Format

- 7.2. Cybertext

- 14.2. Fibel

RIGHT (south, north) :: forward in browser history

DOWN (east, west) :: user info

UP (west, east) :: activity info

AIRWHEEL :: zoom
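The mapping above reads most naturally as (start, finish) pairs as reported by the gesture sensor, which presumably differ from the on-screen direction because of how the sensor is mounted. Assuming that interpretation, and assuming Pimoroni's skywriter Python library (whose flick callback receives start and finish as compass-point strings), the dispatch can be sketched as a lookup table. The action names, sensitivity and zoom limits are hypothetical; no LEFT gesture is listed in the notes, so none is mapped here:

```python
from typing import Optional

# (start, finish) pairs as reported by the sensor -> frontend action.
# The action names are hypothetical placeholders, not fibel identifiers.
FLICK_ACTIONS = {
    ("south", "north"): "history_forward",    # RIGHT
    ("east", "west"): "show_user_info",       # DOWN
    ("west", "east"): "show_activity_info",   # UP
}


def action_for_flick(start: str, finish: str) -> Optional[str]:
    """Look up the action for a flick; None if the pair is not mapped."""
    return FLICK_ACTIONS.get((start.lower(), finish.lower()))


def zoom_step(zoom: float, delta: float, sensitivity: float = 0.01,
              lowest: float = 0.5, highest: float = 3.0) -> float:
    """AIRWHEEL: add a scaled rotation delta to the zoom, clamped to a range.

    The sensitivity and the zoom limits are illustrative values.
    """
    return max(lowest, min(highest, zoom + delta * sensitivity))


# On the device, the lookup could be wired to Pimoroni's skywriter library
# (assumed to be installed), whose flick callback receives start and finish:
#
#   import skywriter
#
#   @skywriter.flick()
#   def on_flick(start, finish):
#       action = action_for_flick(start, finish)
```

Keeping the mapping in one table means a remounted sensor only requires swapping the tuples, not touching the handlers.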

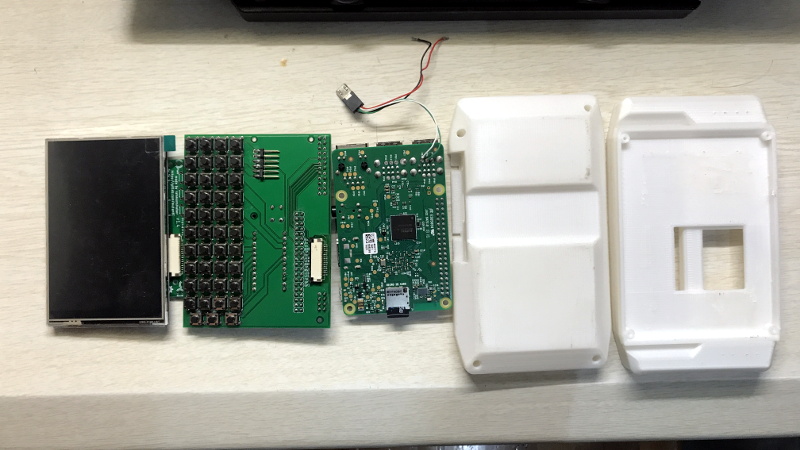

Keywords: digital artefacts, Raspberry Pi Zero, upcycling, make-your-own-device, creativity, touchless man-machine interaction, zone of proximal development, electronic ink, algorithmic drum circle

Phat Dat 1

Speaker Phat 1

Witty pi small 2 1 1

Witty pi big 2

Inky Phat 1

Grove Sound 1

Grove Ultrasound 1

Resistive HDMI 1

Raspi B+ 1 1

Phat Stack 1 1

Skywriter 1 1

E-ink 1

Capacitive HDMI 2

Strompi 1

Daniel - screwdriver

Anna - pen

Kohei - measure

Akif - Swiss knife

- sudo apt-get update && sudo apt-get dist-upgrade

- sudo apt-get install bluealsa

- sudo service bluealsa start

- Switch on your Bluetooth device

- sudo bluetoothctl

- scan on

- pair XX:XX:XX:XX:XX:XX (replace XX:XX:XX:XX:XX:XX with your device's MAC address)

- trust XX:XX:XX:XX:XX:XX

- connect XX:XX:XX:XX:XX:XX

- exit

- aplay -D bluealsa:HCI=hci0,DEV=XX:XX:XX:XX:XX:XX,PROFILE=a2dp /usr/share/sounds/alsa/*
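The `aplay -D` device string is the easiest part of these steps to get wrong when the MAC address is typed by hand. A minimal Python sketch that validates the address and builds the same command, assuming bluealsa is already running and uses the device syntax of the last command; the function names and the example address are hypothetical:

```python
import glob
import re
import subprocess
from typing import List

# Six colon-separated hex pairs, e.g. 00:11:22:AA:BB:CC.
MAC_PATTERN = re.compile(r"(?:[0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}")


def bluealsa_device(mac: str, hci: str = "hci0", profile: str = "a2dp") -> str:
    """Build the bluealsa ALSA device string for a paired device."""
    if not MAC_PATTERN.fullmatch(mac):
        raise ValueError(f"not a Bluetooth MAC address: {mac!r}")
    return f"bluealsa:HCI={hci},DEV={mac.upper()},PROFILE={profile}"


def aplay_command(mac: str, files: List[str]) -> List[str]:
    """Argument list equivalent to the aplay call above."""
    return ["aplay", "-D", bluealsa_device(mac), *files]


def play(mac: str, files: List[str]) -> None:
    """Run aplay on the Pi; raises CalledProcessError if playback fails."""
    subprocess.run(aplay_command(mac, files), check=True)


if __name__ == "__main__":
    # Replace with the address you paired in bluetoothctl.
    speaker = "00:11:22:33:44:55"
    # In the shell the * is expanded by the shell; here glob does it.
    sounds = sorted(glob.glob("/usr/share/sounds/alsa/*"))
    print(" ".join(aplay_command(speaker, sounds)))
```

Rejecting the placeholder `XX:XX:XX:XX:XX:XX` early gives a clearer error than the one aplay reports for an unknown device.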

| Date | Topic |
|---|---|
| 11.4 | Introduction |
| 18.4 | Art & Artefacts |
| 25.4 | Tools & instruments |
| 2.5 | Material |
| 9.5 | Modules and components |
| 16.5 | Making the Itty Bitty Beat Box |
| 23.5 | ECDF visit - Wilhelmstrasse 67 |
| 30.5 | NO COURSE (Ascension Day) |
| 6.6 | Format |
| 13.6 | Shell |
| 20.6 | Berlin Open Lab - Einsteinufer, UdK |
| 27.6 | Optimizing & testing |
| 4.7 | Goal |

- In what domains of human activity do we speak about formats?

- In these disciplines, what kinds of formats do we know?

- Can we imagine other types of formats? What are their advantages? What are their disadvantages?

- What kinds of formats should we use?

- What is modularity?

- What are modules?

- What are the advantages of a modular system?

- What are the disadvantages of a modular system?

MODEA #2 knot 4162 (i.e. https://kastalia.medienhaus.udk-berlin.de/4162 )

| Semester | Topic |
|---|---|
| WiSe 2018/2019 | Bootstrapping & exploring |
| SoSe 2019 | Playing, specifying, defining |
| WiSe 2019/2020 | E-paper |
| SoSe 2020 | Machine learning, speech technologies, handwriting recognition |
| WiSe 2020/2021 | Testing & optimizing |
| SoSe 2021 | Deploying |
| WiSe 2021/2022 | ??? |

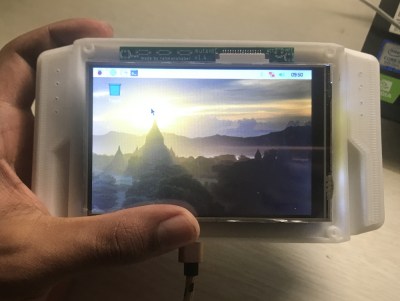

https://mutantc.gitlab.io/index.html

Over the years we’ve seen the Raspberry Pi crammed into almost any piece of hardware you can think of. Frankly, seeing what kind of unusual consumer gadget you can shoehorn a Pi into has become something of a meme in our circles. But the thing we see considerably less of are custom designed practical enclosures which actually play to the Pi’s strengths. Which is a shame, because as the MutantC created by [rahmanshaber] shows, there’s some incredible untapped potential there.

The MutantC features a QWERTY keyboard and sliding display, and seems more than a little inspired by early smartphone designs. You know, how they were before Apple came in and managed to convince every other manufacturer that there was no future for mobile devices with hardware keyboards. Unfortunately, hacking sessions will need to remain tethered as there’s currently no battery in the device. Though this is something [rahmanshaber] says he’s actively working on.


The custom PCB in the MutantC will work with either the Pi Zero or the full size variant, but [rahmanshaber] warns that the latest and greatest Pi 4 isn’t supported due to concerns about overheating. Beyond the Pi the parts list is pretty short, and mainly boils down to the 3D printed enclosure and the components required for the QWERTY board: 43 tactile switches and a SparkFun Pro Micro. Everything is open source, so you can have your own boards run off, print your case, and you’ll be well on the way to reliving those two-way pager glory days.

We’re excited to see where such a well documented open source project like MutantC goes from here. While the lack of an internal battery might be a show stopper for some applications, we think the overall form factor here is fantastic. Combined with the knowledge [Brian Benchoff] collected in his quest to perfect the small-scale keyboard, you’d have something very close to the mythical mobile Linux device that hackers have been dreaming of.

Keyboards:

https://hackaday.io/project/158454-mini-piqwerty-usb-keyboard

https://hackaday.com/2019/04/23/reaction-video-build-your-own-custom-fortnite-controller-for-a-raspberry-pi/

- M.Y.O.D. :: Make Your Own Device

- Upcycle!

- Explore the "adjacent possible".

- Uniqueness and not mass production.

- daniel at udk-berlin.de

- Room 313, Medienhaus

- Office hours 12:30 - 13:30

Tutors & student assistants (SHK)

- Astrid Kraniger a.kraniger@udk-berlin.de

- Nikoloz Kapanadze nikoloz-kapanadze@medienhaus.udk-berlin.de

- digital primer

- music instrument for algorithmic drum circle

- garden guardian


- light source


- Introduction & recapitulation

- UNIX warm-up

- Exercise 1: Upload images to hon.local screens

- PaperTTY

- 10-minute break

- Discussion: What would you like to make?

- Making, coding, sharing

- Make Your Own Device! (a sub-branch of DIY)

- upcycle & recycle

- Lean ICT & digital sobriety attitude

- natural

- healthy

- ecological

- minimalist

And why NOT e-paper?

- e-readers

- notebooks (Onyx)

- phones (Mudita)

- our own devices

- Primer :: Raspberry Pi Zero, ReSpeaker 2-Mics array, Witty Pi 3, 6-inch e-paper (IT8951), 2 x Grove Gesture Recognition systems, speaker

- Herbarium :: Arduino, 4.3 inch e-Paper UART, 1 x Grove Gesture Recognition

- Your project ::

- Goal-oriented

- Organic

- combining thought experiments ("Gedankenexperimente") with permutations of physical stuff (i.e. components)

- collaborative

- working with a real system (advantages? disadvantages?)

- tele-computational (derived from Greek τῆλε, tēle, "far")

- command-line based

- collaborative

- connect to the "cloud" WLAN (password: cirrocumulus)

- open two terminal windows or tabs on your computer

- window 1 :: ssh fibel@fibel1.local

- window 2 :: ssh pi@hon.local

- in both windows, run: screen -S YOURNAME (e.g. screen -S daniel)

- cd /

- ls /
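The connection routine above can also be scripted. A minimal Python sketch that builds the command for each window, assuming the two hosts and users given above; it combines the ssh login and the screen call into one step and uses `ssh -t`, which forces a pseudo-terminal so that screen can start over the SSH connection. The helper names are hypothetical. A participant who wants to join someone else's running session instead of starting a new one could use `screen -x NAME`:

```python
import subprocess
from typing import List

HOSTS = ["fibel@fibel1.local", "pi@hon.local"]


def ssh_screen_command(host: str, name: str, join: bool = False) -> List[str]:
    """ssh into host and start (or, with join=True, attach to) a named screen session."""
    if not name.isalnum():
        raise ValueError("use a plain session name, e.g. 'daniel'")
    # screen -S creates a named session; screen -x attaches to a running one.
    screen_args = ["screen", "-x", name] if join else ["screen", "-S", name]
    # -t forces pseudo-terminal allocation, which screen needs when run via ssh.
    return ["ssh", "-t", host, *screen_args]


def open_session(host: str, name: str, join: bool = False) -> int:
    """Run the command interactively and return ssh's exit status."""
    return subprocess.run(ssh_screen_command(host, name, join)).returncode


if __name__ == "__main__":
    # Print one command per terminal window.
    for host in HOSTS:
        print(" ".join(ssh_screen_command(host, "daniel")))
```
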

We start this Friday (24th April) at 10:00 am

In the middle of a battle there is a company of Italian soldiers in the trenches, and a commander who issues the command “Soldiers, attack!” He cries out in a loud and clear voice to make himself heard in the midst of the tumult, but nothing happens, nobody moves. So the commander gets angry and shouts louder: “Soldiers, attack!” Still nobody moves. And since in jokes things have to happen three times for something to stir, he yells even louder: “Soldiers, attack!” At which point there is a response, a tiny voice rising from the trenches, saying appreciatively “Che bella voce!” “What a beautiful voice!” - excerpt from the book 'A Voice and Nothing More' by Mladen Dolar

In this course, you are going to make your own digital artefacts within the scope of your personal interests in voice and speech.

Throughout the seminar, we will build our own personal speech recognition system based on machine learning, which can understand (more specifically, "transcribe") human speech, as a medium for our artistic practice. In the first half of the seminar, students will be introduced to the domain of Automatic Speech Recognition (ASR) technology, and to the diverse ways in which speech-to-text (STT) inference can be carried out on non-cloud, local (i.e. edge-computing) architectures. This means that Python programming and basic Unix command-line skills will be involved.
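Since the system is meant to transcribe speech, one common way to check how well a trained model does is the word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the model's output, divided by the number of words in the reference. A minimal pure-Python sketch, offered as one possible evaluation step rather than the seminar's prescribed method; the function name is hypothetical:

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (S + D + I) / N, via a word-level Levenshtein distance."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,             # deletion: reference word missing
                cur[j - 1] + 1,          # insertion: extra word in the output
                prev[j - 1] + (r != h),  # substitution, or free if words match
            )
        prev = cur
    return prev[-1] / len(ref)
```

For example, `word_error_rate("che bella voce", "che bella voice")` gives one substitution out of three reference words, about 0.33. Lower is better, and values above 1.0 are possible when the model inserts many extra words.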

In the second half, once we have developed our own system, we will dive into making artefacts (media installations, educational devices, musical instruments, performative material, sound works, etc., of your choice) in which human and machine communicate through voice and speech.

- Please register for the seminar by e-mail before the semester starts.

- The seminar starts on 27.10.

- A Raspberry Pi 4B and a ReSpeaker 2-Mics Pi HAT will be provided to each participant for the duration of the semester.

- The seminar takes place from time to time at the Berlin Open Lab (Einsteinufer 43).
